Success Metrics in Software Projects: What, When, and How to Measure

November 24, 2025 - 20 min read

"What gets measured gets managed" - This classic management principle is more relevant than ever for software projects. However, there's significant confusion in the software development world about what should be measured, which metrics truly matter, and which are merely "vanity metrics."

How do you measure the success of a software project? By lines of code? Sprint completion rate? Customer satisfaction? The answer is all of them, but in the right context and at the right time.

In this comprehensive guide, drawing from Momentup's experience across hundreds of projects, we'll walk you through which metrics to measure in software projects, when to use them, and how to interpret them step by step.

Why Is Measuring Metrics So Important?

A metric-driven approach provides you with:

1. Objective Decision Making

- Make decisions based on data, not gut feeling

- Provide concrete evidence in team discussions

- Report to stakeholders with numbers

2. Early Warning System

- Detect problems before they grow

- Forecast the future with trend analysis

- Respond quickly in crisis situations

3. Continuous Improvement

- Understand what works and what doesn't

- Measure the impact of small changes

- Take action to increase team performance

4. Accountability

- Team tracks its own performance

- Individual and team goals become clear

- Success becomes measurable

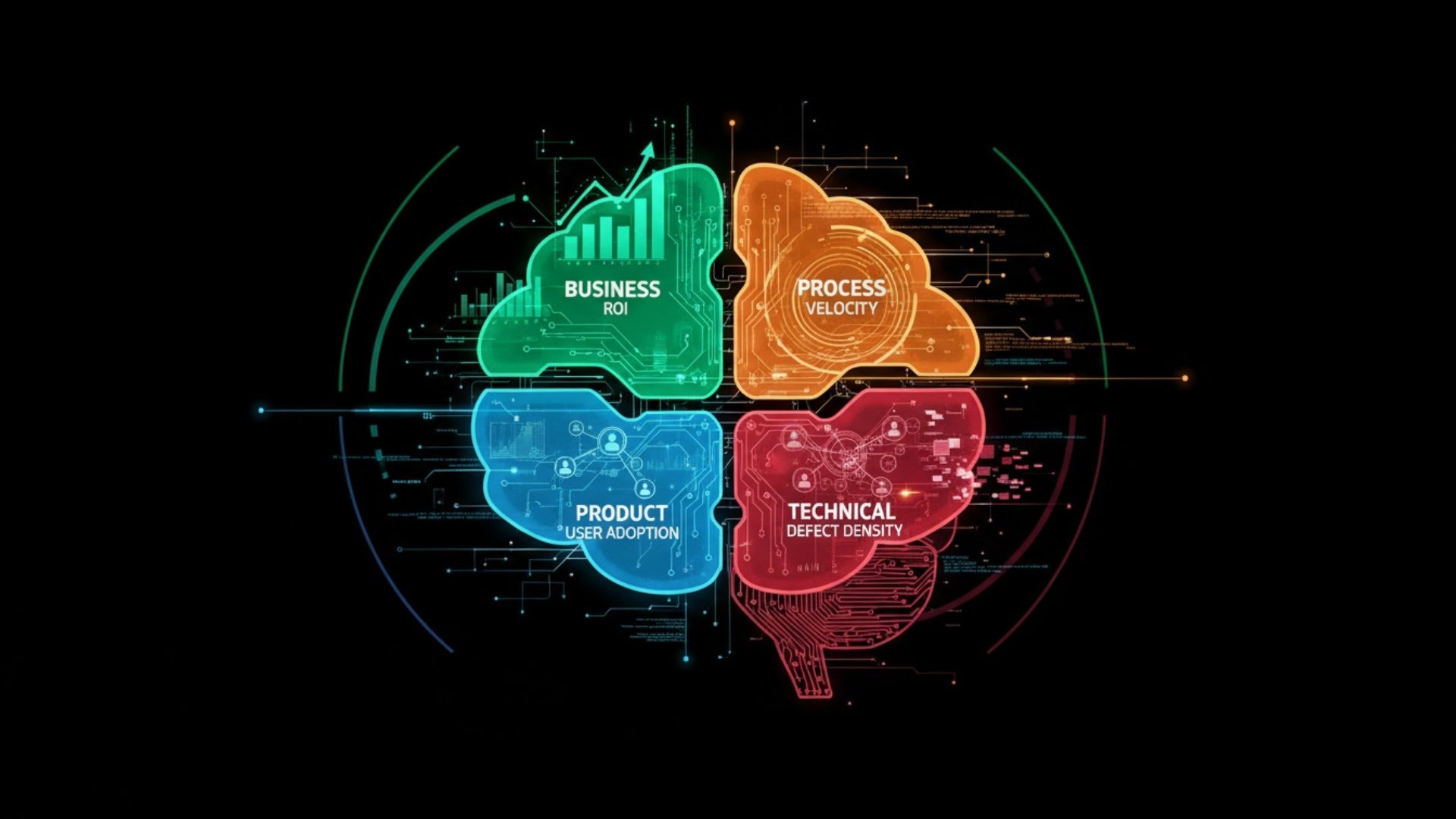

Metric Categories: What to Measure at Which Layer?

Metrics in software projects can be organized into 4 main layers:

1. Business Metrics

Top level - What value does the project add to the business?

2. Product Metrics

How are users using the product, and do they find value in it?

3. Process Metrics

How efficiently is the development process working?

4. Technical Metrics

How healthy are the codebase and the running system?

Each layer feeds into the others. For example, poor technical metrics (high bug rate) affect process metrics (low velocity), which affect product metrics (low adoption), ultimately impacting business metrics (low revenue).

1. Business Metrics

ROI (Return on Investment)

What: Return on investment in the project

When: Goal setting at project start, evaluation at end

How to Calculate:

ROI = (Project Gain - Project Cost) / Project Cost × 100

Example:

Project Cost: $100,000

Annual Gain: $150,000

ROI = (150,000 - 100,000) / 100,000 × 100 = 50%

Practical Tips:

- Calculate not just direct costs but opportunity cost

- Include time factor (payback period)

- Note soft benefits (employee satisfaction, brand value)
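Here is a minimal Python sketch of the ROI formula, plus a simple payback period to cover the time factor. The payback calculation assumes the annual gain accrues evenly month by month, which real projects rarely do exactly. The function names are illustrative.

```python
def roi_percent(gain: float, cost: float) -> float:
    """ROI = (gain - cost) / cost x 100."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost * 100


def payback_period_months(cost: float, annual_gain: float) -> float:
    """Months until cumulative gain covers the cost, assuming the gain is spread evenly over the year."""
    if annual_gain <= 0:
        raise ValueError("annual_gain must be positive")
    return cost * 12 / annual_gain


# Figures from the example above
print(roi_percent(gain=150_000, cost=100_000))                   # 50.0
print(payback_period_months(cost=100_000, annual_gain=150_000))  # 8.0
```

For a multi-year view, sum the gains over the period you care about before calling `roi_percent`. Track opportunity cost and soft benefits alongside the number rather than folding guesses into it.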

Time to Market

What: Time from idea stage to market launch

When: Critical for projects where speed to market is a competitive advantage

How to Measure: Number of days from initial concept approval to production deployment

Benchmark:

- MVP: 2-4 months

- Medium-scale feature: 1-3 months

- Enterprise application: 6-12 months

Improvement strategies:

- Use Agile/Scrum methodology

- MVP approach (start minimal, expand iteratively)

- DevOps and CI/CD automation

- Parallel development through outsourcing

Revenue Impact

What: Project's direct impact on company revenue

When: For revenue-generating projects

How to Measure:

Direct impact:

- New Sales

- Upsell/Cross-sell

- Retention Improvement

Indirect impact:

- Operational cost reduction

- Customer acquisition cost reduction

- Brand value increase

Cost Savings

What: Money/time saved thanks to the project

When: Automation and efficiency projects

How to Calculate:

Monthly Savings = (Old Cost per Run - New Cost per Run) × Runs per Month

Example:

Manual invoice processing: 30 min/invoice, 100 invoices/month

After automation: 2 min/invoice

Employee hourly cost: $50

Savings = (30-2) × 100 × (50/60) = $2,333/month

Annual savings = $28,000
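The invoice arithmetic can be reproduced with a small helper. This is a sketch using the example's figures. The function and parameter names are hypothetical, and it assumes cost is driven only by employee time.

```python
def monthly_savings(old_minutes: float, new_minutes: float,
                    runs_per_month: int, hourly_cost: float) -> float:
    """Minutes saved per run x runs per month, converted to money at the hourly cost."""
    minutes_saved = (old_minutes - new_minutes) * runs_per_month
    return minutes_saved * hourly_cost / 60


monthly = monthly_savings(old_minutes=30, new_minutes=2, runs_per_month=100, hourly_cost=50)
annual = monthly * 12
print(f"${monthly:,.0f}/month, ${annual:,.0f}/year")  # $2,333/month, $28,000/year
```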

2. Product Metrics

Adoption Rate

What: What percentage of target users are actively using the product?

When: First 3-6 months after launch

How to Calculate:

Adoption Rate = (Active Users / Total Target Users) × 100

Healthy targets:

- First month: 20-30%

- 3rd month: 50-60%

- 6th month: 70-80%

Low adoption causes:

- Complex onboarding

- Insufficient training/documentation

- Unclear product value

- Poor UX

- Technical issues

Feature Usage

What: How frequently each feature is used

When: After feature release and regularly (monthly)

How to Measure: Event tracking with analytics tools

Categorization:

- Core features (>60% usage): Heart of the product

- Power user features (20-60%): For advanced users

- Rarely used (<20%): Candidates for improvement or removal

Example analysis:

Feature A: 85% usage → Optimize, invest in performance

Feature B: 45% usage → Improve, make more visible

Feature C: 8% usage → Consider removing, creates maintenance burden
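Once your analytics tool exports per-feature counts, the usage bands above can be applied mechanically. This sketch makes two assumptions:
- "Usage" means the share of active users who triggered a feature at least once in the period.
- The counts are made up to mirror Features A to C.

```python
def usage_category(usage_pct: float) -> str:
    """Bucket a feature using the thresholds above (>60% core, 20-60% power user, <20% rare)."""
    if usage_pct > 60:
        return "core"
    if usage_pct >= 20:
        return "power user"
    return "rarely used"


# Hypothetical export: distinct active users who used each feature this month
active_users = 1_000
users_per_feature = {"Feature A": 850, "Feature B": 450, "Feature C": 80}

for feature, users in users_per_feature.items():
    pct = users * 100 / active_users
    print(f"{feature}: {pct:.0f}% -> {usage_category(pct)}")
```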

User Satisfaction (CSAT/NPS)

CSAT (Customer Satisfaction Score)

Question: "How satisfied were you with this feature/service?" (1-5 scale)

CSAT = (Satisfied Customers / Total Responses) × 100

Satisfied = Those who gave 4 or 5 points

Target: 80%+ CSAT score

NPS (Net Promoter Score)

Question: "How likely are you to recommend this product to a friend?" (0-10 scale)

NPS = %Promoters - %Detractors

Promoters: Those who gave 9-10 points

Passives: Those who gave 7-8 points

Detractors: Those who gave 0-6 points

Benchmark:

- NPS > 50: Excellent

- NPS 30-50: Good

- NPS 0-30: Improvement needed

- NPS < 0: Critical issue
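Both survey scores reduce to counting responses in bands. Below is a minimal sketch with made-up responses. Passives (7-8) still count in the NPS denominator, which is why they pull the score toward zero.

```python
def csat(scores: list[int]) -> float:
    """CSAT: share of 1-5 responses that are 4 or 5, as a percentage."""
    if not scores:
        raise ValueError("no responses")
    satisfied = sum(1 for s in scores if s >= 4)
    return satisfied * 100 / len(scores)


def nps(scores: list[int]) -> float:
    """NPS: % promoters (9-10) minus % detractors (0-6); passives (7-8) count only in the total."""
    if not scores:
        raise ValueError("no responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return (promoters - detractors) * 100 / len(scores)


# Hypothetical survey responses
print(csat([5, 4, 4, 3, 5, 2, 4, 5, 5, 4]))   # 80.0 (8 of 10 satisfied)
print(nps([10, 9, 9, 8, 7, 6, 10, 3, 9, 8]))  # 30.0 (5 promoters, 2 detractors)
```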

Time to Value

What: How quickly does the user find initial value?

When: Critical for onboarding optimization

How to Measure: Time from signup to first important action

Example milestones:

- SaaS tool: Creating first report

- E-commerce: First purchase

- Project management: Completing first task

Improvement tactics:

- Interactive onboarding wizard

- Templates and examples

- Contextual help

- Progress tracking
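Time to value is usually summarized with a median, because a few slow users can skew the average. It is also worth reporting how many users never reach the milestone. The sketch below makes these assumptions:
- Signup and first-value timestamps are available per user.
- `None` marks users who haven't reached the milestone yet.
- The data is hypothetical.

```python
from datetime import datetime
from statistics import median
from typing import Optional


def time_to_value_hours(signups: dict[str, datetime],
                        first_value: dict[str, Optional[datetime]]) -> list[float]:
    """Hours from signup to the first key action, for users who have reached it."""
    return [
        (first_value[user] - signed_up).total_seconds() / 3600
        for user, signed_up in signups.items()
        if first_value.get(user) is not None
    ]


# Hypothetical data; None means the user has not reached the milestone yet
signups = {
    "u1": datetime(2025, 3, 1, 9, 0),
    "u2": datetime(2025, 3, 1, 10, 0),
    "u3": datetime(2025, 3, 2, 8, 0),
}
first_value = {
    "u1": datetime(2025, 3, 1, 9, 45),  # e.g. created a first report
    "u2": datetime(2025, 3, 3, 10, 0),
    "u3": None,
}

hours = time_to_value_hours(signups, first_value)
reached = len(hours) * 100 / len(signups)
print(f"median {median(hours):.1f} h; {reached:.0f}% reached first value")  # median 24.4 h; 67% reached first value
```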

Churn Rate

What: The rate at which users stop using the product or cancel their subscription

When: Critical in SaaS and subscription models

How to Calculate:

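Monthly Churn Rate = (Users Lost During Month / Start of Month Users) × 100

Count only users who were present at the start of the month; new signups during the month should not offset those losses.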

Healthy churn rates:

- Consumer SaaS: 5-7%/month

- Enterprise SaaS: 1-2%/month

- Freemium: 10-15%/month

Categorizing churn reasons:

- Product-market fit: Product doesn't meet needs

- Onboarding: Poor initial experience

- Price: Perceived value doesn't justify the price

- Performance: Technical issues, downtime

- Support: Insufficient support
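Subtracting end-of-month from start-of-month head counts only equals churn when nobody signs up during the month. This sketch counts lost users directly from two sets of user IDs. The IDs and figures are hypothetical.

```python
def monthly_churn_rate(users_at_start: set[str], users_at_end: set[str]) -> float:
    """Percentage of users present at the start of the month who are gone by its end.

    Users who signed up during the month appear only in users_at_end and are
    ignored, so new signups cannot mask losses.
    """
    if not users_at_start:
        raise ValueError("no users at the start of the month")
    lost = users_at_start - users_at_end
    return len(lost) * 100 / len(users_at_start)


# Hypothetical month: 200 users at the start, 10 of them leave, 15 new users sign up
start = {f"user{i}" for i in range(200)}
end = {f"user{i}" for i in range(10, 200)} | {f"new{i}" for i in range(15)}

print(monthly_churn_rate(start, end))              # 5.0
print((len(start) - len(end)) * 100 / len(start))  # -2.5: a head-count difference hides the loss
```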

3. Process Metrics

Sprint Velocity

What: Number of story points completed in a sprint

When: In every project using Scrum

How to Calculate:

Velocity = Average Completed Story Points of Last 3 Sprints

Example:

Sprint 1: 32 points

Sprint 2: 28 points

Sprint 3: 35 points

Velocity = (32 + 28 + 35) / 3 = 31.67 points

Velocity use cases:

- Sprint planning (how many points to commit?)

- Release planning (when will feature be completed?)

- Team capacity planning
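The rolling-average velocity and its use in release planning fit in a few lines. This is a sketch only:
- The 120-point remaining backlog is a hypothetical figure.
- The forecast assumes velocity and scope stay roughly stable, which is rarely exactly true.

```python
import math


def velocity(completed_points: list[int], window: int = 3) -> float:
    """Average completed story points over the last `window` sprints."""
    recent = completed_points[-window:]
    return sum(recent) / len(recent)


def sprints_to_finish(remaining_points: int, avg_velocity: float) -> int:
    """Rough release forecast: sprints needed at the current pace, rounded up."""
    return math.ceil(remaining_points / avg_velocity)


history = [32, 28, 35]
v = velocity(history)
print(round(v, 2))                # 31.67
print(sprints_to_finish(120, v))  # 4
```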

Warning: Velocity should NOT be used for cross-team comparisons! Each team calibrates story points differently.

Sprint Burndown

What: Daily tracking of remaining work in sprint

When: Daily throughout the sprint

Ideal burndown: A steady, straight-line descent to zero by the last day of the sprint

Real scenarios:

- Slow Start: Flat initial days, rapid descent final days → Planning deficit

- Scope Creep: Graph going up → New work being added to sprint

- Fast Completion: Early finish → Undercommitment or overestimation
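The three burndown patterns can be flagged automatically from daily remaining-work snapshots. The sketch below assumes:
- One snapshot is recorded per day, with index 0 as the sprint start.
- The 20% "slow start" margin is an arbitrary choice you would tune.
- The sprint data is hypothetical.

```python
def ideal_remaining(total_points: float, sprint_days: int) -> list[float]:
    """Straight-line burndown: remaining points at day 0 (sprint start) through sprint_days."""
    return [total_points * (1 - day / sprint_days) for day in range(sprint_days + 1)]


def burndown_signals(actual: list[float], total_points: float, sprint_days: int) -> list[str]:
    """Flag the burndown patterns described above. actual[0] is the sprint start."""
    ideal = ideal_remaining(total_points, sprint_days)
    signals = []
    # Scope creep: remaining work goes up from one day to the next
    for day in range(1, len(actual)):
        if actual[day] > actual[day - 1]:
            signals.append(f"day {day}: remaining work increased (scope creep?)")
    # Slow start: still well above the ideal line at mid-sprint (20% margin is an assumption)
    mid = sprint_days // 2
    if len(actual) > mid and actual[mid] > ideal[mid] * 1.2:
        signals.append(f"day {mid}: {actual[mid]} points left vs {ideal[mid]:.0f} ideal (slow start?)")
    # Fast completion: everything done before the last day
    last_day = len(actual) - 1
    if actual[-1] == 0 and last_day < sprint_days:
        signals.append(f"finished on day {last_day} of {sprint_days} (undercommitted?)")
    return signals


# Hypothetical 10-day sprint with 40 committed points
daily_remaining = [40, 40, 40, 38, 42, 36, 30, 22, 12, 4, 0]
for signal in burndown_signals(daily_remaining, total_points=40, sprint_days=10):
    print(signal)
```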

Lead Time & Cycle Time

Lead Time: From when demand arrives until production deployment

Cycle Time: From when development starts until production deployment

Lead Time = Cycle Time + Waiting Time

Example:

Feature request: January 1

Development start: January 15 (14 days waiting)

Production deploy: January 29 (14 days cycle time)

Lead Time: 28 days

Cycle Time: 14 days
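The same example expressed with Python's `datetime.date`. The year 2025 is an assumption, since the example gives only month and day. In practice you would compute this per work item and report the median across items.

```python
from datetime import date


def lead_and_cycle_time(requested: date, started: date, deployed: date) -> dict[str, int]:
    """Days between the three timestamps; lead time = waiting time + cycle time."""
    waiting = (started - requested).days
    cycle = (deployed - started).days
    return {"waiting": waiting, "cycle": cycle, "lead": waiting + cycle}


print(lead_and_cycle_time(date(2025, 1, 1), date(2025, 1, 15), date(2025, 1, 29)))
# {'waiting': 14, 'cycle': 14, 'lead': 28}
```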

Targets:

- Lead time: <30 days

- Cycle time: <14 days

- Waiting time: Minimize

Improvement:

- Do backlog grooming regularly

- Apply WIP (Work in Progress) limits

- Resolve blockers quickly

- Optimize CI/CD pipeline

Deployment Frequency

What: How often are deployments made to production?

When: DevOps maturity indicator

DORA Metrics Benchmark:

- Elite: Multiple times per day

- High: Between once per day and once per week

- Medium: Between once per month and once per week

- Low: Less than once per month
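To place a team in one of these bands, you can convert a deployment count over a window into an average rate. This is a simplification: DORA's surveys ask about typical frequency rather than an average. Treating a month as 30 days is also an assumption. The counts are hypothetical.

```python
def deployment_band(deployments: int, period_days: int) -> str:
    """Map the average deployment rate over a period onto the bands listed above."""
    per_day = deployments / period_days
    if per_day > 1:
        return "Elite"   # more than one production deployment per day
    if per_day >= 1 / 7:
        return "High"    # between once per day and once per week
    if per_day >= 1 / 30:
        return "Medium"  # between once per week and once per month (month ~ 30 days)
    return "Low"         # less than once per month


# Hypothetical counts over a 90-day window
for count in (200, 20, 6, 2):
    print(count, deployment_band(count, period_days=90))
```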

High deployment frequency benefits:

- Fast feedback loop

- Small, safe changes

- Less risk

- Fast rollback

Code Review Time

What: Time from PR/MR opening to merge

When: For every PR

Target: <24 hours
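Given (opened, merged) timestamps exported from your Git host, a sketch of the review-time check might report the median and the share of PRs within the 24-hour target. The sketch makes these assumptions:
- It uses wall-clock hours, so nights and weekends count.
- It only sees merged PRs, so PRs still waiting for review are not reflected.
- The PR data is hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def review_stats(prs: list[tuple[datetime, datetime]],
                 sla: timedelta = timedelta(hours=24)) -> tuple[float, float]:
    """Return (median open-to-merge hours, % of PRs merged within the SLA)."""
    durations = [merged - opened for opened, merged in prs]
    median_hours = median([d.total_seconds() / 3600 for d in durations])
    within_sla = sum(1 for d in durations if d <= sla) * 100 / len(durations)
    return median_hours, within_sla


# Hypothetical merged PRs: (opened, merged)
prs = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 15, 0)),   # 6 h
    (datetime(2025, 3, 3, 10, 0), datetime(2025, 3, 4, 12, 0)),  # 26 h
    (datetime(2025, 3, 4, 9, 0), datetime(2025, 3, 4, 11, 0)),   # 2 h
]
median_h, pct = review_stats(prs)
print(f"median {median_h:.1f} h, {pct:.0f}% within 24 h")  # median 6.0 h, 67% within 24 h
```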

Harms of long review times:

- Developer context loss

- Merge conflict risk

- Decreased team morale

- Delivery slowdown

Improvement tactics:

- Small PRs (<400 lines)

- Define code review SLA

- Sync review sessions instead of async review

- Automated checks (linting, tests)

Meeting Efficiency

What: Meeting effectiveness and time usage

When: Evaluate in regular retrospectives

Measurement criteria:

- Meeting duration (within budget?)

- Number of actions decided

- Participant satisfaction

- Actionable outcome rate

Meeting budget example:

Daily standup: 15 min × 5 days = 75 min/week

Sprint planning: 2 hours

Sprint review: 1 hour

Retrospective: 1.5 hours

Total (one-week sprint): 4.5 hours of ceremonies + 1.25 hours of standups = 5.75 hours/sprint/person

For 8-person team: 46 hours/sprint
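The example's 1.25 hours of standups equals one five-day week, so the 5.75-hour total corresponds to a one-week sprint. This sketch makes the sprint length explicit. It keeps planning, review, and retrospective at the example's fixed durations, which is an assumption; in practice those usually scale with sprint length.

```python
def meeting_hours_per_sprint(sprint_weeks: int, team_size: int) -> tuple[float, float]:
    """Per-person and team-wide meeting hours for one sprint."""
    standups = 15 / 60 * 5 * sprint_weeks  # 15 min/day, 5 days/week
    ceremonies = 2 + 1 + 1.5               # planning + review + retrospective (fixed, per the example)
    per_person = standups + ceremonies
    return per_person, per_person * team_size


print(meeting_hours_per_sprint(sprint_weeks=1, team_size=8))  # (5.75, 46.0)
print(meeting_hours_per_sprint(sprint_weeks=2, team_size=8))  # (7.0, 56.0)
```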

Improvement:

- Make agenda mandatory

- Apply timeboxing

- Invite only the people who are needed (minimize optional attendees)

- Encourage async communication

4. Technical Metrics

Code Coverage

What: Percentage of code executed by automated tests

When: Automatically on every commit

How to Measure: Coverage tools (Jest, JaCoCo, Istanbul)

Targets:

- Critical path: 90%+

- Core business logic: 80%+

- Utility functions: 70%+

- Overall project: 70-80%

Warning: A 100% coverage target can be counterproductive. Quality tests matter more than high coverage.

What is a quality test?

- Tests edge cases

- Validates business logic

- Provides refactoring confidence

- Runs fast

Defect Density

What: Number of bugs per thousand lines of code (KLOC)

When: Before and after release

How to Calculate:

Defect Density = Number of Bugs / KLOC (1000 lines of code)

Example:

Codebase: 50,000 lines of code

Reported bugs: 25

Defect Density = 25 / 50 = 0.5 bugs/KLOC

Benchmark:

- Excellent: <0.5 bugs/KLOC

- Good: 0.5-1 bugs/KLOC

- Medium: 1-2 bugs/KLOC

- Poor: >2 bugs/KLOC
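The defect density calculation and benchmark bands can be expressed as a short sketch. It assumes bugs are counted over a fixed window and lines are counted consistently between measurements (e.g. always excluding blank lines and comments).

```python
def defect_density(bugs: int, lines_of_code: int) -> float:
    """Bugs per thousand lines of code (KLOC)."""
    return bugs / (lines_of_code / 1000)


def rate_density(density: float) -> str:
    """Bands from the benchmark above."""
    if density < 0.5:
        return "Excellent"
    if density <= 1:
        return "Good"
    if density <= 2:
        return "Medium"
    return "Poor"


d = defect_density(bugs=25, lines_of_code=50_000)
print(d, rate_density(d))  # 0.5 Good
```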

Technical Debt

What: Amount of technical debt to be paid in the future

When: Should be continuously tracked

How to Measure: Tools like SonarQube, CodeClimate

Technical debt categories:

1. Deliberate & Prudent

- We took shortcuts for fast delivery, will refactor

- Acceptable, planned payment

2. Deliberate & Reckless

- We did it fast without thinking about later

- Dangerous, should be avoided

3. Inadvertent & Prudent

- We now learned there's a better way

- Normal, learning process

4. Inadvertent & Reckless

- We didn't know what the right approach was

- Preventable with training and code review

Technical debt ratio:

TD Ratio = (Remediation Cost / Development Cost) × 100

Target: TD Ratio < 5%
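Static-analysis tools such as SonarQube estimate development cost as lines of code times a cost per line. This sketch follows that idea with a hypothetical `hours_per_line` parameter you would calibrate for your team. The remediation estimate and codebase size are made-up figures.

```python
def td_ratio_percent(remediation_hours: float, lines_of_code: int, hours_per_line: float) -> float:
    """Technical debt ratio = remediation cost / development cost x 100.

    Development cost is estimated as lines_of_code * hours_per_line; the per-line
    figure is an assumption, not a universal constant.
    """
    development_hours = lines_of_code * hours_per_line
    return remediation_hours * 100 / development_hours


# Hypothetical: 800 h of estimated fixes on a 50,000-line codebase at 0.5 h/line
ratio = td_ratio_percent(remediation_hours=800, lines_of_code=50_000, hours_per_line=0.5)
print(f"{ratio:.1f}% ({'within' if ratio < 5 else 'above'} the 5% target)")
```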

MTTR (Mean Time to Recovery)

What: Average time to restore service after a production incident (outage or degradation)

When: For production incidents

How to Calculate:

MTTR = Total Downtime / Number of Incidents

DORA Metrics Benchmark:

- Elite: <1 hour

- High: <1 day

- Medium: <1 week

- Low: >1 week
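Here is a minimal MTTR sketch using `datetime` arithmetic. Whether an incident's start is when it began or when it was detected is a definition your team has to fix; the data is hypothetical. Because a single long outage can dominate the mean, some teams also report the median.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Total downtime / number of incidents; each incident is (start, service restored)."""
    if not incidents:
        raise ValueError("no incidents in the period")
    total_downtime = sum((restored - start for start, restored in incidents), timedelta())
    return total_downtime / len(incidents)


# Hypothetical incidents in one quarter
incidents = [
    (datetime(2025, 4, 2, 14, 0), datetime(2025, 4, 2, 14, 30)),   # 30 min
    (datetime(2025, 5, 9, 3, 0), datetime(2025, 5, 9, 4, 30)),     # 90 min
    (datetime(2025, 6, 20, 10, 0), datetime(2025, 6, 20, 14, 0)),  # 4 h
]
print(mttr(incidents))  # 2:00:00
```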

Improvement strategies:

- Comprehensive monitoring (Datadog, New Relic)

- Automated alerting

- Runbooks (incident playbook)

- Post-mortem culture

- Blameless retrospective

Change Failure Rate

What: What percentage of production deployments cause problems?

When: After each deployment

How to Calculate:

Change Failure Rate = (Failed Deployments / Total Deployments) × 100

DORA Benchmark:

- Elite: 0-15%

- High: 16-30%

- Medium: 31-45%

- Low: >45%
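This sketch computes the change failure rate and band from a list of deployment outcomes. What counts as "failed" (an incident, a rollback, a hotfix) is a team definition and an assumption here. The deployment log is hypothetical.

```python
def change_failure_rate(failed_flags: list[bool]) -> float:
    """Percentage of deployments that caused a failure (True = failed)."""
    if not failed_flags:
        raise ValueError("no deployments in the period")
    return sum(failed_flags) * 100 / len(failed_flags)


def cfr_band(rate: float) -> str:
    """Bands from the benchmark above."""
    if rate <= 15:
        return "Elite"
    if rate <= 30:
        return "High"
    if rate <= 45:
        return "Medium"
    return "Low"


# Hypothetical month: 40 deployments, 3 needed a rollback or hotfix
outcomes = [True] * 3 + [False] * 37
rate = change_failure_rate(outcomes)
print(rate, cfr_band(rate))  # 7.5 Elite
```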

Failed deployment causes:

- Insufficient testing

- Missing rollback plan

- Complex deployment

- Poor staging environment

API Response Time

What: Response time of API endpoints

When: Continuous (real-time monitoring)

Targets:

- P50 (median): <200ms

- P95: <500ms

- P99: <1000ms

What is P95? 95% of requests complete under this time.
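Percentiles can be computed from raw latency samples. This sketch uses the nearest-rank definition, which is one of several; monitoring tools often interpolate, so their numbers may differ slightly. The samples are hypothetical.

```python
import math


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples_ms or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times (ms) for one endpoint
samples = [120, 95, 180, 210, 150, 130, 110, 170, 160, 140,
           105, 190, 125, 135, 145, 155, 480, 175, 115, 900]
targets = {50: 200, 95: 500, 99: 1000}
for p, limit in targets.items():
    value = percentile(samples, p)
    print(f"P{p}: {value} ms (target < {limit} ms) -> {'OK' if value < limit else 'too slow'}")
```

The two slow outliers barely move the median (145 ms) but set P95 and P99. This is why tail percentiles are tracked alongside P50.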

Slow API causes:

- N+1 query problem

- Missing indexes

- Unnecessary data fetching

- Synchronous external API calls

How to Create a Metric Dashboard?

Layered Dashboard Approach

1. Executive Dashboard (C-Level)

- ROI

- Revenue impact

- Time to market

- NPS

- Monthly update

2. Product Dashboard (PM, PO)

- Adoption rate

- Feature usage

- CSAT/NPS

- Churn rate

- Weekly update

3. Engineering Dashboard (Dev Team)

- Velocity

- Lead/cycle time

- Deployment frequency

- Bug metrics

- Code quality

- Daily update

4. Operations Dashboard (DevOps)

- Uptime

- MTTR

- API performance

- Error rates

- Real-time

Dashboard Principles

1. Actionable: It should be clear what action each metric calls for.

Bad: Code Coverage 65%

Good: Code Coverage 65% (target 75%), tests needed for critical modules

2. Contextualized: A metric alone is meaningless; it needs context.

- Show trend (last 6 months)

- Add target line

- Compare with benchmark

3. Simple: Keep cognitive load low.

- Max 8-10 metrics per dashboard

- Use colors carefully (red = bad, green = good)

- Avoid jargon

4. Accessible: Everyone should be able to reach their dashboard easily.

- URLs should be easy to remember

- Mobile-friendly

- Real-time or near real-time

Tool Recommendations

Analytics:

- Mixpanel, Amplitude (product analytics)

- Google Analytics (web traffic)

- Hotjar (heatmaps)

Project Management:

- Jira, Linear (sprint metrics)

- Notion, Confluence (documentation)

Code Quality:

- SonarQube, CodeClimate

- ESLint, Prettier

Monitoring:

- Datadog, New Relic (APM)

- Sentry, Rollbar (error tracking)

- PagerDuty (incident management)

Custom Dashboard:

- Grafana (open-source, highly customizable)

- Tableau, Power BI (enterprise)

- Metabase (business intelligence)

Common Metric Mistakes and How to Avoid Them

1. Getting Stuck on Vanity Metrics

What is a vanity metric? Looks good but doesn't translate to action.

Bad metrics:

- Total registered users (most may be inactive)

- Lines of code (doesn't show quality)

- Total number of features (unused features)

Good metrics:

- Active users (DAU/MAU)

- Code coverage and bug density (quality)

- Feature adoption and usage

2. Gaming the Metrics

When a team is evaluated by a metric, it tends to optimize the metric rather than the real goal (Goodhart's law).

Example: If bonus is given based on velocity, team starts inflating story points.

Solution: Look at multiple metrics, include qualitative feedback.

3. Ignoring Context

Metrics alone can be misleading.

Example: Velocity has dropped.

- Reason 1: Team performance dropped (bad)

- Reason 2: More complex tasks taken on (normal)

- Reason 3: A developer took sick leave (temporary)

Investigate changes in each metric.

4. Tracking Too Many Metrics

Trying to measure everything results in measuring nothing.

Pareto principle: as a rule of thumb, most of the value (roughly 80%) comes from a small share of metrics (roughly 20%).

Choose 3-5 core metrics per layer, others are secondary.

5. Not Taking Action

If you're collecting metrics but they're not translating to action, you're wasting time.

For each metric:

- What is the target value?

- Who is responsible?

- When will it be reviewed?

- What will be done if it's poor?

Metrics by Project Lifecycle

Discovery & Planning Phase

Focus: Business case validation

Core metrics:

- Opportunity size (market/TAM)

- Estimated ROI

- Competitive analysis

- User research findings

Development Phase

Focus: Efficient execution

Core metrics:

- Sprint velocity

- Burndown chart

- Lead/cycle time

- Code quality metrics

- Deployment frequency

Launch Phase

Focus: Smooth deployment

Core metrics:

- Deployment success rate

- Error rate

- API performance

- System uptime

- User onboarding completion

Growth Phase

Focus: Adoption and retention

Core metrics:

- Adoption rate

- Feature usage

- User retention

- NPS/CSAT

- Revenue metrics

Maturity Phase

Focus: Optimization and scaling

Core metrics:

- Operational efficiency

- Cost per transaction

- Technical debt ratio

- Team productivity

- Customer LTV

Metric Culture at Momentup

At Momentup, we embrace a data-driven approach in all our projects. Here's how we do it:

1. Transparent Metric Sharing

We share important metrics in every sprint review:

- Sprint velocity and burndown charts to track progress

- Code quality metrics from SonarQube

- Bug density and open bug trends

- Customer feedback highlights from recent releases

2. Metric Analysis in Retrospectives

We answer "How can we be better?" with data:

- If velocity dropped, we analyze causes

- If code review time is long, we identify bottlenecks

- If bugs increased, we perform root cause analysis

3. Client Dashboards

We prepare custom dashboards for our clients:

- Project progress (Jira/Linear integration)

- Quality metrics (SonarQube)

- Deployment status

- Sprint summary

4. Continuous Improvement

We carry what we learn from each project to the next:

- We update our benchmark database

- We document best practices

- We optimize our tool stack

Creating a Metric Strategy: 5 Steps

Step 1: Define Goals

Business goals should be clear:

- Revenue increase?

- Cost savings?

- User experience improvement?

- Accelerating time to market?

Step 2: Select Critical Metrics

2-3 core metrics for each goal:

- Choose actionable metrics

- Avoid vanity metrics

Example:

Goal: Increase user retention

Leading indicators:

- Onboarding completion rate

- Time to first value

- Feature engagement

Lagging indicators:

- 30-day retention rate

- Churn rate

- NPS

Step 3: Create Baseline

What is the current state?

- Collect 3-6 months of historical data

- Calculate mean and median values

- Observe trend
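A baseline can be summarized with a mean, a median, and a simple trend. This sketch uses the slope of a least-squares line through the history as the trend indicator. The six months of cycle-time data are hypothetical.

```python
from statistics import mean, median


def baseline(history: list[float]) -> dict[str, float]:
    """Mean, median, and least-squares slope (change per period) of a metric's history."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    x_bar = (n - 1) / 2  # periods are numbered 0..n-1
    y_bar = mean(history)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(history))
    s_xx = sum((x - x_bar) ** 2 for x in range(n))
    return {"mean": y_bar, "median": median(history), "slope_per_period": s_xy / s_xx}


# Hypothetical six months of median cycle time (days)
cycle_time = [12, 14, 11, 13, 15, 16]
print(baseline(cycle_time))
```

Here the slope of about 0.71 means cycle time is growing by roughly 0.7 days per month. Whether a positive slope is good or bad depends on the metric.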

Step 4: Set Targets

Realistic but ambitious goals:

- Look at industry benchmark

- Look at own historical performance

- Consider resource constraints

SMART goals:

- Specific: States exactly what should improve

- Measurable: Tied to a metric with a numeric target

- Achievable: Realistic with the available resources

- Relevant: Supports a business goal

- Time-bound: Has a deadline

Step 5: Establish Review Rhythm

How frequently will it be reviewed?

- Daily: Operational metrics (uptime, errors)

- Weekly: Sprint metrics (velocity, burndown)

- Monthly: Product metrics (adoption, NPS)

- Quarterly: Business metrics (ROI, revenue)

Conclusion: Building a Data-Driven Culture

Metric tracking is not just about setting up dashboards. For a successful metric strategy:

1. Secure Team Buy-In

Metrics are for improvement, not punishment. The team must understand this.

What to do:

- Choose metrics together

- Answer the "Why" question

- Celebrate successes

- Create blameless culture

2. Be Iterative

Your first metrics might not be perfect. Test, learn, optimize.

3. Focus on Action

At every metric review, ask:

- What is this telling us?

- What needs to change?

- Who will take action?

4. Think Long-term

Short-term optimization can jeopardize long-term success.

Example: Compromising code quality to increase velocity.

Maintain balance: Speed + Quality + Sustainability

Data-Driven Project Management with Momentup

At Momentup, we don't just write code; we produce measurable value. In every project:

- We build metric infrastructure from day one

- We share metrics transparently in sprints

- We support decision-making with data

- We apply a continuous improvement cycle

Our Agile methodologies and Scrum framework naturally include a metric-focused approach. From daily standups to sprint retrospectives, data speaks in every ceremony.

Are You Using the Right Metrics in Your Projects?

If you can't clearly answer these questions, it might be time to review your metric strategy:

- Is your project on track, and how do you know?

- How is the team performing?

- Are users adopting the product?

- Is technical debt at dangerous levels?

- Which features add value, which don't?

At Momentup, we're here to help you identify the right metrics in your projects, set up dashboards, and make data-driven decisions.

Get in touch and schedule a free metric assessment consultation.